48 Publications

Showing 31-40 of 48 results

Human memory can store a large amount of information. Nevertheless, recalling is often a challenging task. In a classical free recall paradigm, where participants are asked to repeat a briefly presented list of words, people make mistakes for lists as short as 5 words. We present a model for memory retrieval based on a Hopfield neural network where transitions between items are determined by similarities in their long-term memory representations. Mean-field analysis of the model reveals stable states of the network corresponding to (1) single memory representations and (2) intersections between memory representations. We show that oscillating feedback inhibition in the presence of noise induces transitions between these states, triggering the retrieval of different memories. The network dynamics qualitatively predicts the distribution of time intervals required to recall new memory items observed in experiments. It shows that items with a larger number of neurons in their representation are statistically easier to recall and reveals possible bottlenecks in our ability to retrieve memories. Overall, we propose a neural network model of information retrieval that is broadly compatible with experimental observations and consistent with our recent graphical model (Romani et al., 2013).
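For readers unfamiliar with Hopfield networks, the following is a minimal sketch of classic Hopfield pattern completion, not the model from the paper. It assumes dense ±1 patterns, a plain Hebbian weight matrix, and synchronous sign updates, and it omits the oscillating feedback inhibition and noise that drive transitions between memories in the abstract above. The network size, number of patterns, number of flipped units, random seed, and the function names `train_hebbian` and `recall` are all illustrative choices.

```python
import numpy as np


def train_hebbian(patterns):
    """Build Hebbian weights W = (1/N) * sum_mu xi_mu xi_mu^T with a zero diagonal."""
    n = patterns.shape[1]
    w = patterns.T @ patterns / n
    np.fill_diagonal(w, 0.0)  # no self-connections
    return w


def recall(w, state, steps=10):
    """Apply synchronous sign updates for a fixed number of steps."""
    s = state.copy()
    for _ in range(steps):
        s = np.where(w @ s >= 0, 1, -1)
    return s


# Illustrative sizes: 3 random patterns in 200 units, far below capacity.
rng = np.random.default_rng(0)
n_neurons, n_patterns = 200, 3
patterns = rng.choice([-1, 1], size=(n_patterns, n_neurons))
w = train_hebbian(patterns)

# Corrupt the first stored pattern by flipping 20 of its 200 units.
cue = patterns[0].copy()
flipped = rng.choice(n_neurons, size=20, replace=False)
cue[flipped] *= -1

# Run the dynamics and measure the overlap with the stored pattern.
retrieved = recall(w, cue)
overlap = float(retrieved @ patterns[0]) / n_neurons
print(f"overlap with stored pattern after recall: {overlap:.2f}")
```

At this low memory load the corrupted cue relaxes back to the stored pattern, which corresponds to the "single memory representation" stable states mentioned in the abstract. The intersection states and the transitions between memories require the sparse representations and inhibition dynamics described in the paper and are not reproduced here.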

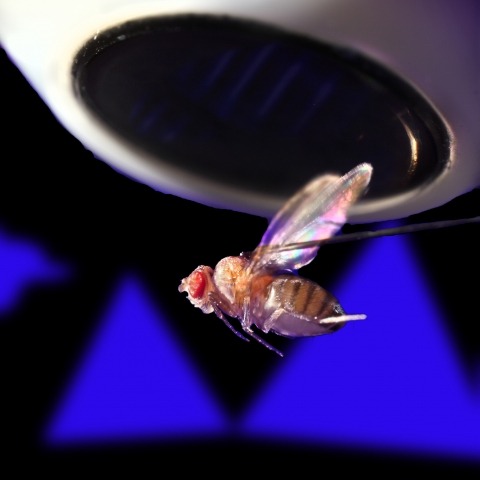

Diverse sensory systems, from audition to thermosensation, feature a separation of inputs into ON (increments) and OFF (decrements) signals. In the Drosophila visual system, separate ON and OFF pathways compute the direction of motion, yet anatomical and functional studies have identified some crosstalk between these channels. We used this well-studied circuit to ask whether the motion computation depends on ON-OFF pathway crosstalk. Using whole-cell electrophysiology, we recorded visual responses of T4 (ON) and T5 (OFF) cells, mapped their composite ON-OFF receptive fields, and found that they share a similar spatiotemporal structure. We fit a biophysical model to these receptive fields that accurately predicts directionally selective T4 and T5 responses to both ON and OFF moving stimuli. This model also provides a detailed mechanistic explanation for the directional preference inversion in response to the prominent reverse-phi illusion. Finally, we used the steering responses of tethered flying flies to validate the model's predicted effects of varying stimulus parameters on the behavioral turning inversion.

A network of excitatory synapses trained with a conservative version of Hebbian learning is used as a model for recognizing the familiarity of thousands of once-seen stimuli from those never seen before. Such networks were initially proposed for modeling memory retrieval (selective delay activity). We show that the same framework allows the incorporation of both familiarity recognition and memory retrieval, and estimate the network's capacity. In the case of binary neurons, we extend the analysis of Amit and Fusi (1994) to obtain capacity limits based on computations of signal-to-noise ratio of the field difference between selective and non-selective neurons of learned signals. We show that with fast learning (potentiation probability approximately 1), the most recently learned patterns can be retrieved in working memory (selective delay activity). A much higher number of once-seen learned patterns elicit a realistic familiarity signal in the presence of an external field. With potentiation probability much less than 1 (slow learning), memory retrieval disappears, whereas familiarity recognition capacity is maintained at a similarly high level. This analysis is corroborated in simulations. For analog neurons, where such analysis is more difficult, we simplify the capacity analysis by studying the excess number of potentiated synapses above the steady-state distribution. In this framework, we derive the optimal constraint between potentiation and depression probabilities that maximizes the capacity.

Power-law scaling in coarse-grained data suggests critical dynamics, but the true source of this scaling often remains unclear. Here, we analyze neural activity recorded during spatial navigation, reproducing power-law scaling under a phenomenological renormalization group (PRG) procedure that clusters units by activity similarity. Such scaling was previously linked to criticality. We show that the iterative nature of the procedure itself leads to the emergence of power laws when applied to heterogeneous, non-interacting units with spatially structured activity, without requiring critical interactions. Furthermore, the scaling exponents produced by heterogeneous non-interacting units match the exponents observed in recorded neural data. A simplified version of the PRG further reveals how heterogeneity smooths transitions across scales, mimicking critical behavior. The resulting exponents depend systematically on system and population size, predictions confirmed by subsampling the data.

A large variability in performance is observed when participants recall briefly presented lists of words. The sources of such variability are not known. Our analysis of a large data set of free recall revealed a small fraction of participants who reached extremely high performance, including many trials with the recall of complete lists. Moreover, some of them developed a number of consistent input-position-dependent recall strategies, in particular recalling words consecutively ("chaining") or in groups of consecutively presented words ("chunking"). The time course of acquisition and the particular choice of positional grouping varied among participants. Our results show that acquiring positional strategies plays a crucial role in improving recall performance.

Ring attractors are a class of recurrent networks hypothesized to underlie the representation of heading direction. Such network structures, schematized as a ring of neurons whose connectivity depends on their heading preferences, can sustain a bump-like activity pattern whose location can be updated by continuous shifts along either turn direction. We recently reported that a population of fly neurons represents the animal's heading via bump-like activity dynamics. We combined two-photon calcium imaging in head-fixed flying flies with optogenetics to overwrite the existing population representation with an artificial one, which was then maintained by the circuit with naturalistic dynamics. A network with local excitation and global inhibition enforces this unique and persistent heading representation. Ring attractor networks have long been invoked in theoretical work; our study provides physiological evidence of their existence and functional architecture.

Most people have great difficulty in recalling unrelated items. For example, in free recall experiments, lists of more than a few randomly selected words cannot be accurately repeated. Here we introduce a phenomenological model of memory retrieval inspired by theories of neuronal population coding of information. The model predicts nontrivial scaling behaviors for the mean and standard deviation of the number of recalled words for lists of increasing length. Our results suggest that associative information retrieval is a dominating factor that limits the number of recalled items.

In a recent experiment, functional magnetic resonance imaging blood oxygen level-dependent (fMRI BOLD) signals were compared in different cortical areas (primary-visual and associative), when subjects were required to covertly name images in two protocols: sequences of images, with and without intervening delays. The amplitude of the BOLD signal in protocols with delay was found to be closer to that without delays in associative areas than in primary areas. The present study provides an exploratory proposal for the identification of the neural activity substrate of the BOLD signal in quasi-realistic networks of spiking neurons, in networks sustaining selective delay activity (associative) and in networks responsive to stimuli, but whose unique stationary state is one of spontaneous activity (primary). A variety of observables are 'recorded' in the network simulations, applying the experimental stimulation protocol. The ratios of the candidate BOLD signals, in the two protocols, are compared in networks with and without delay activity. There are several options for recovering the experimental result in the model networks. One common conclusion is that the distinguishing factor is the presence of delay activity. The effect of NMDAr is marginal. The ultimate quantitative agreement with the experimental results depends on a distinction of the baseline signal level from its value in delay-period spontaneous activity. This may be attributable to the subjects' attention. Modifying the baseline results in a quantitative agreement for the ratios, and provides a definite choice of the candidate signals. The proposed framework produces predictions for the BOLD signal in fMRI experiments, upon modification of the protocol presentation rate and the form of the response function.

Sex differences in behaviour exist across the animal kingdom, typically under strong genetic regulation. In Drosophila, previous work has shown that fruitless and doublesex transcription factors identify neurons driving sexually dimorphic behaviour. However, the organisation of dimorphic neurons into functional circuits remains unclear. We now present the connectome of the entire Drosophila male central nervous system. This contains 166,691 neurons spanning the brain and ventral nerve cord, fully proofread and comprehensively annotated including fruitless and doublesex expression and 11,691 cell types. By comparison with a previous female brain connectome, we provide the first comprehensive description of the differences between male and female brains to synaptic resolution. Of 7,319 cross-matched cell types in the central brain, 114 are dimorphic with an additional 262 male- and 69 female-specific (totalling 4.8% of neurons in males and 2.4% in females). This resource enables analysis of full sensory-to-motor circuits underlying complex behaviours as well as the impact of dimorphic elements. Sex-specific and dimorphic neurons are concentrated in higher brain centres while the sensory and motor periphery are largely isomorphic. Within higher centres, male-specific connections are organised into hotspots defined by male-specific neurons or the presence of male-specific arbours on neurons that are otherwise similar between sexes. Numerous circuit switches reroute sensory information to form conserved, antagonistic circuits controlling opposing behaviours.

Rodent hippocampus exhibits strikingly different regimes of population activity in different behavioral states. During locomotion, hippocampal activity oscillates at theta frequency (5-12 Hz) and cells fire at specific locations in the environment, the place fields. As the animal runs through a place field, spikes are emitted at progressively earlier phases of the theta cycles. During immobility, hippocampus exhibits sharp irregular bursts of activity, with occasional rapid orderly activation of place cells expressing a possible trajectory of the animal. The mechanisms underlying this rich repertoire of dynamics are still unclear. We developed a novel recurrent network model that accounts for the observed phenomena. We assume that the network stores a map of the environment in its recurrent connections, which are endowed with short-term synaptic depression. We show that the network dynamics exhibits two different regimes that are similar to the experimentally observed population activity states in the hippocampus. The operating regime can be controlled solely by external inputs. Our results suggest that short-term synaptic plasticity is a potential mechanism that contributes to shaping the population activity in hippocampus.