We develop methods and tools for the automatic analysis of microscopy image datasets that are too large for manual inspection alone.

Lab webpage: funkelab.github.io

Our lab develops machine learning methods for the life sciences, with a focus on microscopy image analysis.

We are particularly interested in:

- Identification of Structures of Interest in Large Datasets

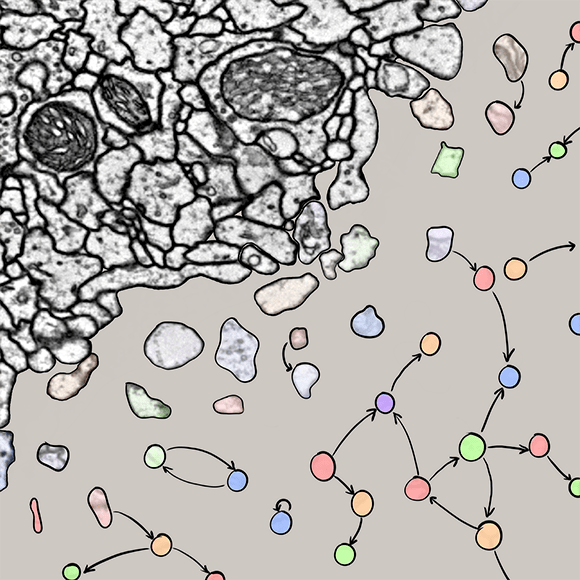

In the field of connectomics, we develop methods to segment neurons and to detect and classify synapses in very large electron microscopy datasets.

We also work on the segmentation and tracking of cells in live-cell imaging datasets.

- Explainable Machine Learning Methods

We develop methods that use machine learning to identify and visualize subtle patterns in biological datasets. These methods can reveal previously unknown phenotypic differences, e.g., in image data.

- Mechanistic Machine Learning

To increase the utility and interpretability of machine learning methods, we design models that directly incorporate biophysical constraints and domain knowledge. So far, our models have been used to count fluorophores beyond the diffraction limit and to infer synaptic plasticity rules from behavioral measurements.