
Due to their small size and transparency, zebrafish larvae are amenable to a range of fluorescence microscopy techniques. With the development of sensitive genetically encoded calcium indicators, these techniques have been extended to whole-brain imaging of neural activity with cellular resolution. This technique has been used to study brain-wide population dynamics accompanying sensory processing and sensorimotor transformations, and has spurred the development of innovative closed-loop behavioral paradigms in which stimulus-response relationships can be studied. More recently, microscopes have been developed that allow whole-brain calcium imaging in freely swimming and behaving larvae. In this review, we highlight the technologies underlying whole-brain functional imaging in zebrafish, provide examples of the sensory and motor processes that have been studied with this technique, and discuss the need to merge data from whole-brain functional imaging studies with neurochemical and anatomical information to develop holistic models of functional neural circuits.

Transient exposure to ketamine can trigger lasting changes in behavior and mood. We found that brief ketamine exposure causes long-term suppression of futility-induced passivity in larval zebrafish, reversing the "giving-up" response that normally occurs when swimming fails to cause forward movement. Whole-brain imaging revealed that ketamine hyperactivates the norepinephrine-astroglia circuit responsible for passivity. After ketamine washout, this circuit exhibits hyposensitivity to futility, leading to long-term increased perseverance. Pharmacological, chemogenetic, and optogenetic manipulations show that norepinephrine and astrocytes are necessary and sufficient for ketamine's long-term perseverance-enhancing aftereffects. In vivo calcium imaging revealed that astrocytes in adult mouse cortex are similarly activated during futility in the tail suspension test and that acute ketamine exposure also induces astrocyte hyperactivation. The cross-species conservation of ketamine's modulation of noradrenergic-astroglial circuits and evidence that plasticity in this pathway can alter the behavioral response to futility hold promise for identifying new strategies to treat affective disorders.

Mood-altering compounds hold promise for the treatment of many psychiatric disorders, such as depression, but connecting their molecular, circuit, and behavioral effects has been challenging. Here we find that, analogous to effects in rodent learned helplessness models, ketamine pre-exposure persistently suppresses futility-induced passivity in larval zebrafish. While antidepressants are thought to primarily act on neurons, brain-wide imaging in behaving zebrafish showed that ketamine elevates intracellular calcium in astroglia for many minutes, followed by persistent calcium downregulation post-washout. Calcium elevation depends on astroglial α1-adrenergic receptors and is required for suppression of passivity. Chemo-/optogenetic perturbations of noradrenergic neurons and astroglia demonstrate that the aftereffects of glial calcium elevation are sufficient to suppress passivity by inhibiting neuronal-astroglial integration of behavioral futility. Imaging in mouse cortex reveals that ketamine elevates astroglial calcium through conserved pathways, suggesting that ketamine exerts its behavioral effects by persistently modulating evolutionarily ancient neuromodulatory systems spanning neurons and astroglia.

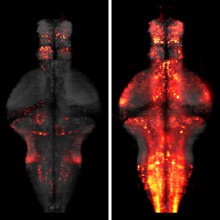

The identification of active neurons and circuits in vivo is a fundamental challenge in understanding the neural basis of behavior. Genetically encoded calcium (Ca²⁺) indicators (GECIs) enable quantitative monitoring of cellular-resolution activity during behavior. However, such indicators require online monitoring within a limited field of view. Alternatively, post hoc staining of immediate early genes (IEGs) indicates highly active cells within the entire brain, albeit with poor temporal resolution. We designed a fluorescent sensor, CaMPARI, that combines the genetic targetability and quantitative link to neural activity of GECIs with the permanent, large-scale labeling of IEGs, allowing a temporally precise "activity snapshot" of a large tissue volume. CaMPARI undergoes efficient and irreversible green-to-red conversion only when elevated intracellular Ca²⁺ and experimenter-controlled illumination coincide. We demonstrate the utility of CaMPARI in freely moving larvae of zebrafish and flies, and in head-fixed mice and adult flies.
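The coincidence logic described above (conversion only when high Ca²⁺ and illumination overlap, and conversion is permanent) can be illustrated with a toy kinetic model. This is a hypothetical sketch, not the measured CaMPARI photophysics: the Hill-function calcium dependence and the parameters `k`, `kd`, and `n_hill` are assumptions, and the model treats conversion at resting calcium as zero, which a real indicator need not do.

```python
import numpy as np


def red_fraction(ca, light, dt, k=1.0, kd=0.5, n_hill=3.0):
    """Toy model of CaMPARI-style photoconversion (all parameters hypothetical).

    ca:    calcium trace (arbitrary units), one value per time step
    light: illumination per time step (0 = off, 1 = photoconversion light on)
    Returns the fraction of molecules converted green -> red by the end.
    Conversion needs calcium AND light; converted molecules never revert.
    """
    ca = np.asarray(ca, dtype=float)
    light = np.asarray(light, dtype=float)
    # Per-step conversion rate: gated by light, scaled by a Hill function of calcium.
    rate = k * light * ca ** n_hill / (kd ** n_hill + ca ** n_hill)
    # Irreversible first-order loss of the green pool: exp(-integral of rate).
    green = np.exp(-np.sum(rate) * dt)
    return 1.0 - green


steps, dt = 100, 0.01                       # one observation window (arbitrary units)
active, quiet = np.full(steps, 2.0), np.zeros(steps)
on, off = np.ones(steps), np.zeros(steps)
for label, ca, light in [("active + light", active, on),
                         ("active, no light", active, off),
                         ("quiet + light", quiet, on),
                         ("quiet, no light", quiet, off)]:
    print(f"{label:18s} red fraction = {red_fraction(ca, light, dt):.3f}")
```

Only the "active + light" case accumulates red signal; because the rate is never negative, switching the light off freezes the red fraction, which is what makes the label a snapshot of activity during the illumination window.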

Our understanding of the input-output function of single cells has been substantially advanced by biophysically accurate multi-compartmental models. The large number of parameters needing hand tuning in these models has, however, somewhat hampered their applicability and interpretability. Here we propose a simple and well-founded method for automatic estimation of many of these key parameters: 1) the spatial distribution of channel densities on the cell’s membrane; 2) the spatiotemporal pattern of synaptic input; 3) the channels’ reversal potentials; 4) the intercompartmental conductances; and 5) the noise level in each compartment. We assume experimental access to: a) the spatiotemporal voltage signal in the dendrite (or some contiguous subpart thereof, e.g. via voltage sensitive imaging techniques), b) an approximate kinetic description of the channels and synapses present in each compartment, and c) the morphology of the part of the neuron under investigation. The key observation is that, given data a)-c), all of the parameters 1)-4) may be simultaneously inferred by a version of constrained linear regression; this regression, in turn, is efficiently solved using standard algorithms, without any “local minima” problems despite the large number of parameters and complex dynamics. The noise level 5) may also be estimated by standard techniques. We demonstrate the method’s accuracy on several model datasets, and describe techniques for quantifying the uncertainty in our estimates.
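The key observation above, that membrane-voltage dynamics are linear in the channel conductances once voltage and channel kinetics are known, can be sketched for a single hypothetical compartment. Everything here is an assumption for illustration: the three channels, their reversal potentials, the prescribed open-fraction time courses, and the noise-free forward-Euler simulation. The non-negative least-squares solver below enumerates support sets, which is exact but only practical for a handful of parameters; it is not the authors' implementation.

```python
import itertools

import numpy as np


def nnls_by_enumeration(A, y):
    """Non-negative least squares: min ||A x - y||^2 subject to x >= 0.

    Tries every support set (2**n subsets), so it is only practical for a
    handful of columns; larger problems need a dedicated solver.
    """
    n = A.shape[1]
    best_x, best_res = np.zeros(n), float(np.sum(y ** 2))
    for k in range(1, n + 1):
        for support in itertools.combinations(range(n), k):
            cols = list(support)
            coef, *_ = np.linalg.lstsq(A[:, cols], y, rcond=None)
            if np.all(coef >= 0):  # keep only feasible candidates
                x = np.zeros(n)
                x[cols] = coef
                res = float(np.sum((A @ x - y) ** 2))
                if res < best_res:
                    best_x, best_res = x, res
    return best_x


# --- Hypothetical single-compartment example (all values are assumptions) ---
rng = np.random.default_rng(0)
dt, n_steps, C = 0.05, 4000, 1.0                 # time step, samples, capacitance
E = np.array([-65.0, -80.0, 50.0])              # reversal potentials: leak, K-like, Na-like
g_true = np.array([0.3, 2.0, 0.0])              # ground truth; Na-like channel absent
t = np.arange(n_steps) * dt
# Open fractions assumed known from an approximate kinetic description.
p = np.stack([np.ones_like(t),
              0.5 * (1 + np.sin(2 * np.pi * t / 40.0)),
              0.5 * (1 + np.cos(2 * np.pi * t / 25.0))], axis=1)
I_inj = rng.normal(0.0, 5.0, n_steps)            # known injected current

# Simulate C dV/dt = -sum_c g_c p_c (V - E_c) + I_inj with forward Euler.
V = np.empty(n_steps)
V[0] = -65.0
for i in range(n_steps - 1):
    I_ion = np.sum(g_true * p[i] * (V[i] - E))
    V[i + 1] = V[i] + dt * (I_inj[i] - I_ion) / C

# dV/dt is linear in the conductances, so regress it on per-channel drive terms.
A = -p[:-1] * (V[:-1, None] - E)                 # shape (n_steps - 1, n_channels)
y = C * np.diff(V) / dt - I_inj[:-1]
g_hat = nnls_by_enumeration(A, y)
print(np.round(g_hat, 4))
```

Because the objective is a convex quadratic with linear constraints, there are no spurious local minima; with noisy voltage data the same regression would return an estimate rather than an exact recovery, and the residual would inform the noise-level estimate.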

The dense connectivity in the brain means that one neuron's activity can influence many others. To observe this interconnected system comprehensively, an aspiration within neuroscience is to record from as many neurons as possible at the same time. There are two useful routes toward this goal: one is to expand the spatial extent of functional imaging techniques, and the second is to use animals with small brains. Here we review recent progress toward imaging many neurons and complete populations of identified neurons in small vertebrates and invertebrates.

The processing of sensory input and the generation of behavior involve large networks of neurons, which necessitates new technology for recording from many neurons in behaving animals. In the larval zebrafish, light-sheet microscopy can be used to record the activity of almost all neurons in the brain simultaneously at single-cell resolution. Existing implementations, however, cannot be combined with visually driven behavior because the light sheet scans over the eye, interfering with presentation of controlled visual stimuli. Here we describe a system that overcomes the confounding eye stimulation through the use of two light sheets and combines whole-brain light-sheet imaging with virtual reality for fictively behaving larval zebrafish.

Developments in electrical and optical recording technology are scaling up the size of neuronal populations that can be monitored simultaneously. Light-sheet imaging is rapidly gaining traction as a method for optically interrogating activity in large networks and presents both opportunities and challenges for understanding circuit function.

All multicellular systems produce and dynamically regulate extracellular matrices (ECMs) that play essential roles in both biochemical and mechanical signaling. Though the spatial arrangement of these extracellular assemblies is critical to their biological functions, visualization of ECM structure is challenging, in part because the biomolecules that compose the ECM are difficult to fluorescently label individually and collectively. Here, we present a cell-impermeable small-molecule fluorophore, termed Rhobo6, that turns on and red shifts upon reversible binding to glycans. Given that most ECM components are densely glycosylated, the dye enables wash-free visualization of ECM, in systems ranging from in vitro substrates to in vivo mouse mammary tumors. Relative to existing techniques, Rhobo6 provides a broad substrate profile, superior tissue penetration, non-perturbative labeling, and negligible photobleaching. This work establishes a straightforward method for imaging the distribution of ECM in live tissues and organisms, lowering barriers for investigation of extracellular biology.

Understanding brain function requires monitoring and interpreting the activity of large networks of neurons during behavior. Advances in recording technology are greatly increasing the size and complexity of neural data. Analyzing such data will pose a fundamental bottleneck for neuroscience. We present a library of analytical tools called Thunder built on the open-source Apache Spark platform for large-scale distributed computing. The library implements a variety of univariate and multivariate analyses with a modular, extendable structure well-suited to interactive exploration and analysis development. We demonstrate how these analyses find structure in large-scale neural data, including whole-brain light-sheet imaging data from fictively behaving larval zebrafish, and two-photon imaging data from behaving mouse. The analyses relate neuronal responses to sensory input and behavior, run in minutes or less and can be used on a private cluster or in the cloud. Our open-source framework thus holds promise for turning brain activity mapping efforts into biological insights.
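As an illustration of the kind of univariate analysis mentioned above (relating each neuron's or voxel's response to a sensory or behavioral trace), here is a plain NumPy sketch. It is not Thunder's API and does not use Spark; the function name `voxel_correlations` and the block-wise loop are hypothetical stand-ins for mapping the same computation over distributed partitions.

```python
import numpy as np


def voxel_correlations(data, regressor, block_size=1024):
    """Pearson correlation of each voxel's time series with one regressor.

    data:      array of shape (n_voxels, n_timepoints)
    regressor: array of shape (n_timepoints,), e.g. a stimulus or behavior trace
    Voxels are processed in independent blocks, the way a distributed framework
    could map the same function over partitions. Constant voxels yield NaN.
    """
    r = (regressor - regressor.mean()) / regressor.std()
    out = np.empty(data.shape[0])
    for start in range(0, data.shape[0], block_size):
        block = data[start:start + block_size]
        # z-score each voxel's time series within the block
        z = (block - block.mean(axis=1, keepdims=True)) / block.std(axis=1, keepdims=True)
        # mean of products of z-scores = Pearson correlation
        out[start:start + block_size] = z @ r / r.size
    return out


rng = np.random.default_rng(1)
stim = np.sin(np.linspace(0, 8 * np.pi, 200))    # hypothetical stimulus trace
data = np.vstack([2 * stim + 1, -stim, rng.normal(size=(5, 200))])
rho = voxel_correlations(data, stim, block_size=3)
print(np.round(rho, 3))
```

Because each block is processed without reference to the others, the same function could in principle be applied per partition on a cluster; the multivariate analyses the abstract mentions would need a different, less embarrassingly parallel structure.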