29 Publications

Showing 11-20 of 29 results

Neurons throughout the sensory pathway adapt their responses depending on the statistical structure of the sensory environment. Contrast gain control is a form of adaptation in the auditory cortex, but it is unclear whether the dynamics of gain control reflect efficient adaptation, and whether they shape behavioral perception. Here, we trained mice to detect a target presented in background noise shortly after a change in the contrast of the background. The observed changes in cortical gain and behavioral detection followed the dynamics of a normative model of efficient contrast gain control; specifically, target detection and sensitivity improved slowly in low contrast, but degraded rapidly in high contrast. Auditory cortex was required for this task, and cortical responses were not only similarly affected by contrast but predicted variability in behavioral performance. Combined, our results demonstrate that dynamic gain adaptation supports efficient coding in auditory cortex and predicts the perception of sounds in noise.

The ability to adapt to changes in stimulus statistics is a hallmark of sensory systems. Here, we developed a theoretical framework that can account for the dynamics of adaptation from an information processing perspective. We use this framework to optimize and analyze adaptive sensory codes, and we show that codes optimized for stationary environments can suffer from prolonged periods of poor performance when the environment changes. To mitigate the adversarial effects of these environmental changes, sensory systems must navigate tradeoffs between the ability to accurately encode incoming stimuli and the ability to rapidly detect and adapt to changes in the distribution of these stimuli. We derive families of codes that balance these objectives, and we demonstrate their close match to experimentally observed neural dynamics during mean and variance adaptation. Our results provide a unifying perspective on adaptation across a range of sensory systems, environments, and sensory tasks.

Previously, in (Hermundstad et al., 2014), we showed that when sampling is limiting, the efficient coding principle leads to a 'variance is salience' hypothesis, and that this hypothesis accounts for visual sensitivity to binary image statistics. Here, using extensive new psychophysical data and image analysis, we show that this hypothesis accounts for visual sensitivity to a large set of grayscale image statistics at a striking level of detail, and also identify the limits of the prediction. We define a 66-dimensional space of local grayscale light-intensity correlations, and measure the relevance of each direction to natural scenes. The 'variance is salience' hypothesis predicts that two-point correlations are most salient, and predicts their relative salience. We tested these predictions in a texture-segregation task using un-natural, synthetic textures. As predicted, correlations beyond second order are not salient, and predicted thresholds for over 300 second-order correlations match psychophysical thresholds closely (median fractional error < 0.13).

To successfully forage for food, animals must balance the energetic cost of searching for food sources with the energetic benefit of exploiting those sources. While the Marginal Value Theorem provides one normative account of this balance by specifying that a forager should leave a food patch when its energetic yield falls below the average yield of other patches in the environment, it assumes the presence of other readily reachable patches. In natural settings, however, a forager does not know whether it will encounter additional food patches, and it must balance potential energetic costs and benefits accordingly. Upon first encountering a patch of food, it faces a decision of whether and when to leave the patch in search of better options, and when to return if no better options are found. Here, we explore how a forager should structure its search for new food patches when the existence of those patches is unknown, and when searching for those patches requires energy that can only be harvested from a single known food patch. We identify conditions under which it is more favorable to explore the environment in several successive trips rather than in a single long exploration, and we show how the optimal sequence of trips depends on the forager’s beliefs about the distribution and nutritional content of food patches in the environment. This optimal strategy is well approximated by a local decision that can be implemented by a simple neural circuit architecture. Together, this work highlights how energetic constraints and prior beliefs shape optimal foraging strategies, and how such strategies can be approximated by simple neural networks that implement local decision rules.

When foraging for resources, animals must often sample many options that yield reward with different probabilities. In such scenarios, many animals have been shown to exhibit “matching”, an empirical behavioral observation in which the fraction of rewarded samples is the same across all options. While previous work has shown that matching can be optimal in environments with diminishing returns, this condition is not sufficient to determine optimality. Furthermore, while diminishing returns naturally arise when resources in the environment deplete and take time to be replenished, the specific form of diminishing returns depends on the temporal structure and statistics of the replenishment process. Here, we explore how these environmental properties affect whether matching is optimal. By considering an agent that samples different options with fixed sampling rates, we derive the probability of collecting a reward as a function of these sampling rates for different types of environments, and we analytically determine the conditions under which the optimal sampling-rate policy exhibits matching. When all options are governed by the same replenishment dynamics, we find that optimality gives rise to matching across a wide range of environments. However, when these dynamics differ across options, the optimal policy can deviate from matching. In such cases, the rank-ordering of observed reward probabilities depends only on the qualitative nature of the replenishment process, but not on the specific replenishment rates. As a result, the optimal policy can exhibit under- or over-matching depending on how rewarding the different options are. We use this result to identify environmental settings under which performance differs substantially between matching and optimality. Finally, we show how fluctuations in these replenishment rates, which can represent either environmental stochasticity or the agent’s internal uncertainty about the environment, can accentuate deviations between optimality and matching. Together, these findings deepen our understanding of the relationship between environmental variability and behavioral optimality, and they provide testable experimental predictions across a wide range of environmental settings.

To interpret the sensory environment, the brain combines ambiguous sensory measurements with knowledge that reflects context-specific prior experience. But environmental contexts can change abruptly and unpredictably, resulting in uncertainty about the current context. Here we address two questions: how should context-specific prior knowledge optimally guide the interpretation of sensory stimuli in changing environments, and do human decision-making strategies resemble this optimum? We probe these questions with a task in which subjects report the orientation of ambiguous visual stimuli that were drawn from three dynamically switching distributions, representing different environmental contexts. We derive predictions for an ideal Bayesian observer that leverages knowledge about the statistical structure of the task to maximize decision accuracy, including knowledge about the dynamics of the environment. We show that its decisions are biased by the dynamically changing task context. The magnitude of this decision bias depends on the observer's continually evolving belief about the current context. The model therefore not only predicts that decision bias will grow as the context is indicated more reliably, but also as the stability of the environment increases, and as the number of trials since the last context switch grows. Analysis of human choice data validates all three predictions, suggesting that the brain leverages knowledge of the statistical structure of environmental change when interpreting ambiguous sensory signals.

After finding food, a foraging animal must decide whether to continue feeding, or to explore the environment for potentially better options. One strategy to negotiate this tradeoff is to perform local searches around the food but repeatedly return to feed. We studied this behavior in flies and used genetic tools to uncover the underlying mechanisms. Over time, flies gradually expand their search, shifting from primarily exploiting food sources to exploring the environment, a change that is likely driven by increases in satiety. We found that flies’ search patterns preserve these dynamics even as the overall scale of the search is modulated by starvation-induced changes in metabolic state. In contrast, search induced by optogenetic activation of sugar-sensing neurons does not show these dynamics. We asked what navigational strategies underlie local search. Using a generative model, we found that a change in locomotor pattern after food consumption could account for repeated returns to the food, but failed to capture relatively direct, long return trajectories. Alternative strategies, such as path integration or sensory taxis, could allow flies to return from larger distances. We tested this by individually silencing the fly’s head direction system, olfaction, and hygrosensation, and found that the only substantial effect was from perturbing hygrosensation, which reduced the number of long exploratory trips. Our study illustrates that local search is composed of multiple behavioral features that evolve over time based on both internal and external factors, providing a path towards uncovering the underlying neural mechanisms.

Internal representations are thought to support the generation of flexible, long-timescale behavioral patterns in both animals and artificial agents. Here, we present a novel conceptual framework for how Drosophila use their internal representation of head direction to maintain preferred headings in their surroundings, and how they learn to modify these preferences in the presence of selective thermal reinforcement. To develop the framework, we analyzed flies’ behavior in a classical operant visual learning paradigm and found that they use stochastically generated fixations and directed turns to express their heading preferences. Symmetries in the visual scene used in the paradigm allowed us to expose how flies’ probabilistic behavior in this setting is tethered to their head direction representation. We describe how flies’ ability to quickly adapt their behavior to the rules of their environment may rest on a behavioral policy whose parameters are flexible but whose form is genetically encoded in the structure of their circuits. Many of the mechanisms we outline may also be relevant for rapidly adaptive behavior driven by internal representations in other animals, including mammals.

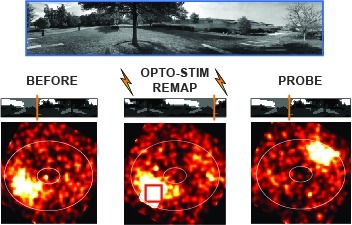

Many animals rely on an internal heading representation when navigating in varied environments. How this representation is linked to the sensory cues that define different surroundings is unclear. In the fly brain, heading is represented by 'compass' neurons that innervate a ring-shaped structure known as the ellipsoid body. Each compass neuron receives inputs from 'ring' neurons that are selective for particular visual features; this combination provides an ideal substrate for the extraction of directional information from a visual scene. Here we combine two-photon calcium imaging and optogenetics in tethered flying flies with circuit modelling, and show how the correlated activity of compass and visual neurons drives plasticity, which flexibly transforms two-dimensional visual cues into a stable heading representation. We also describe how this plasticity enables the fly to convert a partial heading representation, established from orienting within part of a novel setting, into a complete heading representation. Our results provide mechanistic insight into the memory-related computations that are essential for flexible navigation in varied surroundings.

Hunger and thirst have distinct goals but control similar ingestive behaviors, and little is known about neural processes that are shared between these behavioral states. We identify glutamatergic neurons in the peri-locus coeruleus (periLC neurons) as a polysynaptic convergence node from separate energy-sensitive and hydration-sensitive cell populations. We develop methods for stable hindbrain calcium imaging in free-moving mice, which show that periLC neurons are tuned to ingestive behaviors and respond similarly to food or water consumption. PeriLC neurons are scalably inhibited by palatability and homeostatic need during consumption. Inhibition of periLC neurons is rewarding and increases consumption by enhancing palatability and prolonging ingestion duration. These properties comprise a double-negative feedback relationship that sustains food or water consumption without affecting food- or water-seeking. PeriLC neurons are a hub between hunger and thirst that specifically controls motivation for food and water ingestion, which is a factor that contributes to hedonic overeating and obesity.